In Western art music, graphic notation is a term used to describe the broad spectrum of non-traditional approaches to notating music that began to emerge during the twentieth century. An important component of this evolution has been the capacity to present musical scores on screen, in colour and in motion. This trend is reflected both in increased academic activity [1] and in the growing number of new works documented on video [2]. While traditional music notation evolved over a long period, recent advances in media for presenting notation have been rapid, and we should therefore consider ourselves “still on the ‘steep part of the curve’ from the technology standpoint” [3].

It has been argued that “the language and notation we use exerts a large influence on what we think and create” [4]. Digital innovations offer an opportunity to expand the possibilities of the musical score. Composers continue to explore an increasingly broad range of idiosyncratic approaches to creating music, and many of these novel approaches (for example, microtonality [5], pulseless music [6], algorithmically generated music [7], guided improvisation [8], interactivity [9] and mobile structure [10]) are at best cumbersome and at worst impossible to represent using traditional music notation.

This practice-led project will develop a robust platform for the investigation of musical notation and its performance. It will extend the existing Decibel Scoreplayer to include the industry-standard Open Sound Control (OSC) communication protocol [11] (to facilitate synchronisation with external devices), multi-touch interaction, a range of new models of score presentation (in addition to its current scrolling model), and integrated use of the iPad camera. These developments will allow new paradigms for the musical score to be explored, and will provide a platform for systematically evaluating their effectiveness by collecting data on performers’ eye movements in relation to the score itself.
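OSC messages are compact binary packets, usually sent over UDP. As a rough illustration of what synchronising the Scoreplayer with external devices involves at the protocol level, the Python sketch below encodes a message following the OSC 1.0 layout: a NUL-padded address pattern, a type-tag string beginning with a comma, then big-endian arguments. The address `/score/play`, the host and the port are hypothetical placeholders, not the Decibel Scoreplayer's actual OSC namespace, and only the `i`, `f` and `s` argument types are handled.

```python
import socket
import struct


def osc_string(s: str) -> bytes:
    """Encode an OSC-string: ASCII bytes, a terminating NUL, then extra NUL
    padding so that the total length is a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def osc_message(address: str, *args) -> bytes:
    """Build an OSC 1.0 message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    if not address.startswith("/"):
        raise ValueError("OSC address patterns must begin with '/'")
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            # bool is a subclass of int in Python; OSC's T/F tags are not covered here
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return osc_string(address) + osc_string(type_tags) + payload


def send_osc(message: bytes, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send one OSC message as a single UDP datagram (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
```

For example, `send_osc(osc_message("/score/play", 0.0))` would send a hypothetical "play from 0.0 seconds" message to a listener on local port 9000. UDP keeps latency low but does not guarantee delivery, which is one reason synchronisation schemes often resend or timestamp their messages.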

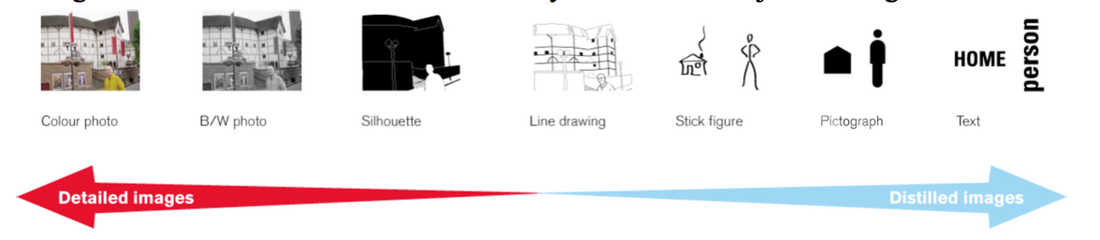

In collaboration with Dr. Stuart Medley (SCA), I will explore the effectiveness of visual representation of sounds in the music score. In a 2011 paper, Medley discusses visual representation as a continuum ranging between photographic realism and textual description.

Figure 1. An example of a realism continuum (from Medley 2011).

Scored forms of musical representation occupy a similar continuum, ranging in this case from the spectrogram (a precise frequency/time/amplitude representation of sound) to text scores that simply describe the required sound.

Figure 2. An example of a musical representation continuum

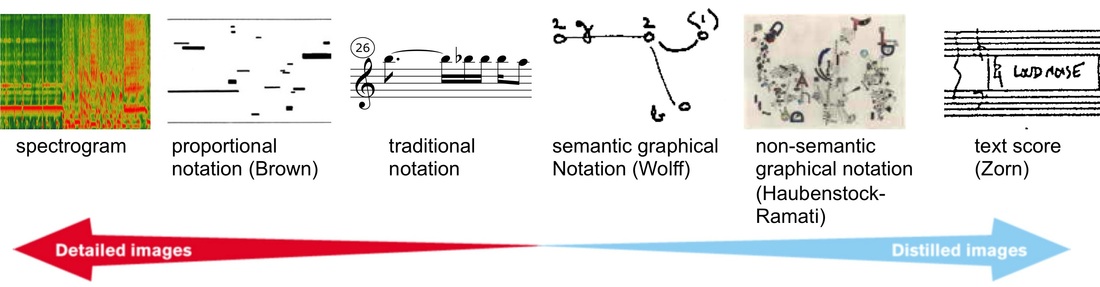

In collaboration with Dr. Medley, I will create a range of new notational approaches for scores, exploring a similar continuum between literal representation of sound and figurative “evocative notation” (Figure 3 gives an indication of the direction this work might take).

The research will investigate performers’ responses to these graphical strategies and establish criteria by which to judge their effectiveness in conveying the composer’s intention. A particular focus will be the theory, proposed by neuroscientists V.S. Ramachandran and E.M. Hubbard, that “there may be natural constraints on the ways in which sounds are mapped on to objects” [12]. Evidence of such a constraint (for example, that certain graphemes are consistently mapped to specific sounds) would clearly be of great value to those expanding the notational palette of the musical score.

Along similar lines, I will also collaborate with colour theorist Paul Green-Armytage to investigate the potential for a mapping between sound and colour, albeit of a more general nature. Recent research by Stephen Palmer at the University of California, Berkeley suggests a high degree of correlation in the ways people map colour to sound. A recent study conducted in the United States and Mexico suggested that “faster, major music is associated with lighter, more saturated, yellower colors. Slower, minor music is associated with darker, less saturated, bluer colors” [13]. Similarly consistent results were obtained in mapping studies using selections of classical orchestral music, single-line piano melodies, diverse musical genres, instrumental timbres and two-note musical intervals. Adding colour to musical notation makes it possible to encode a greater amount and variety of information in the score. Again, evidence of a correlation between colour and sonic phenomena would be of great value to composers.

This project will build on work I began in 2009 with the creation of a “scrolling score player” [14] that allowed the synchronous ensemble performance of scores notating musical phenomena that were either difficult or impossible to represent with traditional music notation, such as continuous glissandi, timbral changes and lack of pulse. This “scrolling score” solution proved to be an extremely versatile model for presenting a range of non-traditional notations that required synchronisation between performers, such as graphically notated scores and text scores. It became the prototype for the Decibel Scoreplayer app for iPad [15], which was released on the iTunes Store in 2012.

The scrolling score departs from the traditional music score in a crucial way: it is the score, rather than the eye, that moves. The consequences of this for the way in which the score is read are not currently understood. When reading a traditional score, the eye interrogates the static page, fixating for varying periods on details (and, as research has shown, fixating on blank spaces for up to 23% of the time [16]).
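The moving-score model can be stated quite simply. In the minimal sketch below, which assumes a constant scroll rate and is not the Decibel Scoreplayer's actual code, the score is a wide image sliding leftwards past a fixed playhead, and the drawing position is derived directly from the time elapsed since a shared start. Deriving position from a common clock, rather than accumulating per-frame increments, is one way several devices could stay aligned in ensemble performance. The class and parameter names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScrollingScore:
    """Hypothetical model of a scrolling score: a wide score image slides
    leftwards past a fixed vertical playhead at a constant rate."""
    width_px: float           # total width of the score image, in pixels
    pixels_per_second: float  # constant scroll rate
    playhead_x: float         # screen x position of the fixed playhead

    @property
    def duration(self) -> float:
        """Seconds needed for the whole score image to pass the playhead."""
        return self.width_px / self.pixels_per_second

    def score_x_at(self, elapsed: float) -> float:
        """Score-image x coordinate under the playhead after `elapsed` seconds."""
        elapsed = min(max(elapsed, 0.0), self.duration)  # clamp to the score's extent
        return elapsed * self.pixels_per_second

    def image_offset(self, elapsed: float) -> float:
        """Screen x at which to draw the image's left edge so that
        score_x_at(elapsed) lines up with the playhead."""
        return self.playhead_x - self.score_x_at(elapsed)
```

With a 6000-pixel score scrolling at 50 pixels per second, `ScrollingScore(6000.0, 50.0, 200.0).duration` is 120 seconds, and after 10 seconds the image's left edge is drawn at x = -300, so that score position 500 sits under the playhead. Each device would compute `elapsed` from a shared start time rather than from its own frame count.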

In collaboration with Professor Craig Speelman, I will develop an experimental method to capture eye-movement data, measuring variations in fixations and saccades as performers read from the scrolling score and from a number of other novel scoring methods I have developed, including real-time permutation, transformation and generation of scores. The aim is to gain insight into the effectiveness of mapping shape and colour to sound, as well as of a range of scoring strategies. We will also investigate the possibility of using the iPad’s built-in camera to collect eye-movement data, which would allow the data to be precisely synchronised and time-coded.
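The proposal does not specify how fixations and saccades will be extracted from the raw gaze data. One widely used baseline is velocity-threshold identification (I-VT), described by Salvucci and Goldberg, which treats gaze samples moving faster than a threshold as saccadic and groups the remaining consecutive samples into fixations. The Python sketch below is a minimal version under assumed inputs: timestamped `(t, x, y)` samples, such as a camera-based tracker might produce, and a threshold in position units per second. It illustrates the technique only; it is not the project's method, and practical pipelines typically add noise filtering and a minimum fixation duration.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (time in seconds, x, y)


@dataclass
class Fixation:
    start: float  # time of the first sample in the fixation (s)
    end: float    # time of the last sample in the fixation (s)
    x: float      # mean x position of the grouped samples
    y: float      # mean y position of the grouped samples

    @property
    def duration(self) -> float:
        return self.end - self.start


def _to_fixation(group: List[Sample]) -> Fixation:
    """Collapse a run of consecutive below-threshold samples into one fixation."""
    n = len(group)
    return Fixation(
        start=group[0][0],
        end=group[-1][0],
        x=sum(x for _, x, _ in group) / n,
        y=sum(y for _, _, y in group) / n,
    )


def ivt_fixations(samples: Sequence[Sample], velocity_threshold: float) -> List[Fixation]:
    """Velocity-threshold identification (I-VT).

    Each sample is labelled by the speed of the movement that reached it:
    below `velocity_threshold` counts as fixation, otherwise saccade.
    Runs of consecutive fixation samples become Fixation objects; the gaps
    between them correspond to saccades.
    """
    if len(samples) < 2:
        return []
    labels = []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        labels.append(hypot(x1 - x0, y1 - y0) / dt < velocity_threshold)
    labels.insert(0, labels[0])  # the first sample takes the label of the first interval

    fixations: List[Fixation] = []
    group: List[Sample] = []
    for sample, is_fixation in zip(samples, labels):
        if is_fixation:
            group.append(sample)
        elif group:
            fixations.append(_to_fixation(group))
            group = []
    if group:
        fixations.append(_to_fixation(group))
    return fixations
```

Summed `duration` values per fixation, together with each fixation's position relative to the score, are the kind of measures from which findings such as the proportion of time spent fixating on blank space can be derived.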

Notes

[1] Contemporary Music Review 29 (2010) was devoted to the discussion of "Virtual Scores and Real-Time Playing"; Leonardo Music Journal 21 (2011), "Beyond Notation: Communicating Music", included several significant discussions of the screen score.

See also:

Winkler, G. E. (2004). "The Real Time-Score. The Missing-Link in Computer-Music Performance." In Sound and Music Computing ’04. Paris: IRCAM.

Hajdu, G. and Didkovsky, N. (2009). "On the Evolution of Music Notation in Network Music Environments." Contemporary Music Review 28(4): 395–407.

Kim-Boyle, D. (2010). "Real-time Score Generation for Extensible Open Forms." Contemporary Music Review 29(1): 3–15.

McClelland, C. and Alcorn, M. (2008). "Exploring New Composer/Performer Interactions Using Real-Time Notation." In International Computer Music Conference ’08. Belfast, Northern Ireland.

[2] See the Icelandic collective S.L.Á.T.U.R.’s site: http://animatednotation.blogspot.com.au/

[3] Dewar, J. (1998). The Information Age and the Printing Press: Looking Backward to See Ahead. Santa Monica, CA: RAND, p. 5.

[4] Dannenberg, R. (1996). "Extending Music Notation Through Programming." In C. Harris (ed.), Computer Music in Context. Harwood Academic, 63–76, p. 63.

[5] Keislar, D., Blackwood, E. et al. (1991). "Six American Composers on Nonstandard Tunings." Perspectives of New Music 29(1).

[6] Burt, W. (1991). "Australian Experimental Music 1963–1990." Leonardo Music Journal 1(1): 5–10, pp. 5–6.

[7] Hudak, P., Makucevich, T., et al. (1993). "Haskore Music Notation: An Algebra of Music." Journal of Functional Programming 1(1): 1–18.

[8] Lock, G. (2008). "'What I Call a Sound': Anthony Braxton’s Synaesthetic Ideal and Notations for Improvisers." Critical Studies in Improvisation / Études critiques en improvisation 4(1).

[9] Freeman, J. (2008). "Extreme Sight-reading, Mediated Expression and Audience Participation: Real-time Music Notation in Live Performance." Computer Music Journal 32: 25–41.

[10] Dubinets, E. (2007). "Between Mobility and Stability: Earle Brown’s Compositional Process." Contemporary Music Review 26(3): 409–426.

[11] http://opensoundcontrol.org/introduction-osc

[12] Ramachandran, V. S. and Hubbard, E. M. (2001). "Synaesthesia: A Window Into Perception, Thought and Language." Journal of Consciousness Studies 8(12): 3–34, p. 19.

[13] Palmer, S. E. (2013). "Color, Music, and Emotion (in Synesthetes and Non-Synesthetes)." International Colour Association (AIC), University of Newcastle, Newcastle upon Tyne, 12 July 2013.

[14] Vickery, L. R. (2011). "The Possibilities of Novel Formal Structures Through Computer Controlled Live Performance." In J. Coulter (ed.), Organic Sounds in Live Electroacoustic Music. Auckland, New Zealand: Australasian Computer Music Association, 112–117.

[15] Decibel ScorePlayer app, Apple iTunes Store: https://itunes.apple.com/us/app/decibel-scoreplayer/id622591851?mt=8 (accessed 28 August 2013).

[16] Madell, J. and Hébert, S. (2008). "Eye Movements and Music Reading: Where Do We Look Next?" Music Perception 26(2): 157–170, p. 167.

